I. Maintain my code and solve the problem that I can't read frames from a video in random order:

- Save the video into a Matfile

II. Collecting testing videos

- Zoom, Tilt, Viewpoint_change are ok

- Real Case Demo is not fine, because the result of capturing the color markers as known points is unstable

Thursday, 31 July 2014

Wednesday, 30 July 2014

Estimate Homography to do 2D to 3D integration (12): Extract known points in pairs automatically

I. Integrate the reference toolbox found yesterday into my current system

- Combine the whole process of computing the "transformation from Projection coordinate to World coordinate" in MATLAB

- Continuously record a video and compute the transformation mentioned above for every frame

- Need to check whether the "value of depth" is the same as what the C++ version shows

II. Complete the modification mentioned above:

- Do verifications in 3 cases (zoom, tilt, viewpoint_change)

- Also, increase the number of known points (...as the initial pairs of points for EPnP) from 12 to 36, which means there are 9 points located in each color marker

- The result improved a lot (...probably because of the larger number of initial point pairs as well as the more accurate segmentation results): 1 to 2 pixel errors in Zoom and Tilt; 1 to 3 pixel errors in Viewpoint_change
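A minimal sketch of how pixel errors like the ones above could be computed: the Euclidean distance between each re-projected point and its reference position, summarized as the mean and the worst case. The function names and the sample coordinates are hypothetical and for illustration only; they are not measured data from these experiments.

```python
import math

def reprojection_errors(projected, reference):
    """Per-point Euclidean pixel distance between two lists of (x, y) points."""
    if len(projected) != len(reference):
        raise ValueError("point lists must have the same length")
    return [math.hypot(px - rx, py - ry)
            for (px, py), (rx, ry) in zip(projected, reference)]

def summarize(errors):
    """Return (mean, worst) error in pixels."""
    return sum(errors) / len(errors), max(errors)

# Hypothetical coordinates, not values from the experiments.
projected = [(100.0, 50.0), (203.0, 54.0), (310.0, 240.0)]
reference = [(101.0, 50.0), (200.0, 50.0), (310.0, 242.0)]
mean_err, worst_err = summarize(reprojection_errors(projected, reference))
print(f"mean {mean_err:.2f} px, worst {worst_err:.2f} px")
```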

Tuesday, 29 July 2014

Estimate Homography to do 2D to 3D integration (11): Extract known points in pairs automatically

I. Re-install OpenNI1:

- Would like to make my whole process work in MATLAB

- Need to continuously record a video from Kinect... my previous framework only outputs one image from Kinect via OpenNI2 in C++

- In both the 32-bit and the 64-bit version, only the following versions of the files can trigger Kinect:

* openni-win64-1.5.4.0-dev / openni-win32-1.5.4.0-dev

* SensorKinect093-Bin-Win64-v5.1.2.1 / SensorKinect093-Bin-Win32-v5.1.2.1

* nite-win64-1.5.2.21-dev / nite-win32-1.5.2.21-dev

- In OpenNI1, remember to edit the XML files in OpenNI as well as NiTE folders

- Result: successfully set up the OpenNI1 environment

II. Reference to do the transformation "Projective coordinate to World coordinate" in MATLAB

- Kinect MATLAB

- This toolbox can deal with the issue, but only OpenNI1 provides these functions

- Result: successfully applied this reference toolbox in MATLAB

Monday, 28 July 2014

Estimate Homography to do 2D to 3D integration (10): Extract known points in pairs automatically

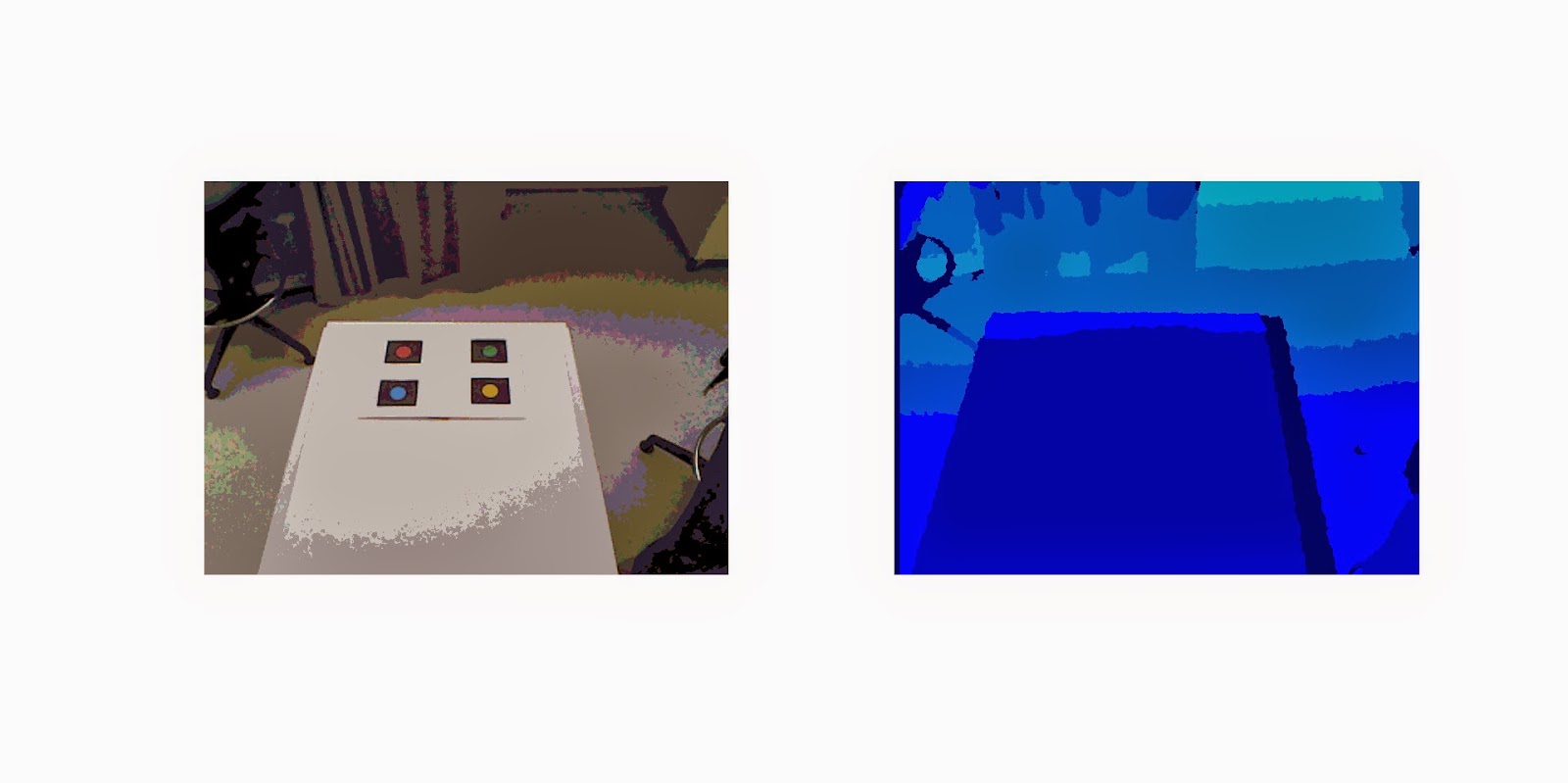

I. Re-making the color markers because of the unstable results last week

- Modified the size: circles of 150x150 pixels

- Choose colors that are recognizable through the eye-tracker (...which has lower brightness):

yellow (255, 255, 0);

red (255, 0, 0);

light_blue (0, 255, 255);

green (0, 255, 0)
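As an illustration of why well-separated RGB values help, here is a small sketch that assigns a pixel to the nearest of the four marker colors listed above. The function name `classify_pixel` and the `max_distance` threshold are assumptions for this example, not part of the actual segmentation code.

```python
MARKER_COLORS = {
    "yellow": (255, 255, 0),
    "red": (255, 0, 0),
    "light_blue": (0, 255, 255),
    "green": (0, 255, 0),
}

def classify_pixel(rgb, max_distance=120.0):
    """Return the nearest marker color name, or None if no color is close enough.

    max_distance is an assumed threshold in RGB units, chosen for illustration.
    """
    best_name, best_dist = None, float("inf")
    for name, ref in MARKER_COLORS.items():
        dist = sum((a - b) ** 2 for a, b in zip(rgb, ref)) ** 0.5
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None

print(classify_pixel((250, 240, 20)))   # a slightly off yellow
print(classify_pixel((128, 128, 128)))  # gray background
```

With darker footage, such as from the eye-tracker, a threshold in plain RGB is likely to be fragile; comparing in a brightness-normalized color space would be one thing to try.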

II. Testing videos

1. Simple cases (zoom, tilt, viewpoint_change)

2. Real cases (only show 3 of 4)

III. Experimental results

1. Simple cases:

- In the zoom and tilt cases... 1 to 3 pixels on average, 5 to 8 pixels in the worst case

- In the viewpoint_change case... 1 to 5 pixels on average, 8 to 12 pixels in the worst case

IV. Real-time demo

- Now, the whole system can run by directly reading video from a USB Webcam

- Reading recorded videos is no longer the only way to run the whole system

- Issue: delay while running... because of the massive computation in our proposed system (EPnP -> Ray_tracing)

- This is not the major issue currently, but I can try to improve it

Saturday, 26 July 2014

Estimate Homography to do 2D to 3D integration (9): Extract known points in pairs automatically

I. Using bigger color markers

II. Draft code

- Use "the positions of the seeds generated by the Particle Filter" as hints

- Apply "morphological operations" + "the hints" to segment these color markers more accurately

- Find the central position of the points in each marker and use it as a known point for EPnP
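The last two steps can be sketched as follows: given a binary segmentation mask, take the centroid of the marker pixels, optionally restricted to a window around a particle-filter hint so that other blobs are ignored. This is a minimal sketch; `mask_centroid`, `hint`, and `radius` are hypothetical names, and the morphological clean-up is assumed to have been applied to the mask already.

```python
def mask_centroid(mask, hint=None, radius=None):
    """Centroid (x, y) of the nonzero cells of a 2D list mask.

    If hint=(hx, hy) and radius are given, only cells inside the square
    window of that radius around the hint are counted.
    Returns None when no cell qualifies.
    """
    count = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if not value:
                continue
            if hint is not None and radius is not None:
                if abs(x - hint[0]) > radius or abs(y - hint[1]) > radius:
                    continue
            count += 1
            sum_x += x
            sum_y += y
    if count == 0:
        return None
    return sum_x / count, sum_y / count

# Two blobs; the hint selects the right-hand one.
mask = [
    [1, 1, 0, 0, 0, 0],
    [1, 1, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
]
print(mask_centroid(mask))                      # centroid of both blobs
print(mask_centroid(mask, hint=(4, 1), radius=1))  # centroid of the hinted blob
```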

III. Experimental results

- In the zoom, tilt, and viewpoint_change cases, the error in (x,y) coordinates is 10 to 12 pixels on average

- The error is higher than with the smaller markers used previously (...3 to 5 pixel error in each frame)

IV. Recording a video to simulate the real working case

- Has the information of possession point across frames

- Video:

V. Discussion with supervisor and report the preliminary results

- A 10 pixel error in my case (640x480 resolution) is acceptable, but can still be improved

- My 3rd experiment is not limited to a particular scenario; just try to build a real example which demonstrates that our proposed framework works fine... especially with the eye-tracker

Friday, 25 July 2014

Estimate Homography to do 2D to 3D integration (8): Extract known points in pairs automatically

I. Eye-tracker calibration to get the extrinsic parameters

- Some images are inappropriate for this processing and need to be re-generated.

- result:

II. Pre-testing the videos recorded by eye-tracker

- The brightness is lower than with the webcam, which makes the color-based segmentation work incorrectly.

- The small color markers need to be enlarged so that the viewing distance from the eye-tracker to the object can be far enough... to fit the needs in real life

Thursday, 24 July 2014

Draft Master Thesis (1)

I. Skimming a thesis by a former student to get more ideas about how to plan mine

II. Read some relevant references

Wednesday, 23 July 2014

Summary of Weekly Meeting - (19)

I. Summary

- Which experiments are going to be shown in the end

(a) using a chessboard to show the proposed method is robust (manual case)

(b) using color markers to show the proposed method is acceptable (more automatic case)

(c) using the eye-tracker to make a demo... with some real surgical accessories

- Ways to demonstrate results

(a) re-projection coordinates from 2D_Img onto 2D_Kinect_frame (in video)

(b) (x,y,t) graph related to re-projection coordinates on 2D_Kinect_frames

(c) (x,y,z) graph related to re-projection coordinates on 3D_world_coordinate_system

- Next work

(a) using the eye-tracker to substitute the Webcam

(b) tracking a colorful object via the eye-tracker (...generate the possession points across time)

(c) show the possession points on the 2D_Kinect_frame (...based on my framework)

II. Generating videos from the eye-tracker in order to do camera calibration (...via the calibration toolbox)

Tuesday, 22 July 2014

Estimate Homography to do 2D to 3D integration (7): Extract known points in pairs automatically

I. Results:

- Modification

(a) decrease the size of the color markers to 20x20 pixels

(b) increase the number of known points from 3 to 6 (...as the input for the EPnP algorithm)

Friday, 18 July 2014

Estimate Homography to do 2D to 3D integration (5): Extract known points in pairs automatically

I. Making 2 experimental setups to address the inaccuracy in my current framework:

For each setup:

- 1 Kinect frame

- 4 Webcam videos including zoom, rotation, tilt, viewpoint_change

1. Decrease the size of the color markers

2. Pre-setup other markers located at the center of the original markers

Thursday, 17 July 2014

Summary of Weekly Meeting - (18)

I. Summary:

- Using a color-based Particle Filter mechanism to automatically locate the color markers (... with a real-time verification)

- Get 50% to 60% correctness in the "2D to 3D" work

- To improve the accuracy, here are possible approaches to think about:

1. decrease the size of the color markers

2. increase the number of known points, which are extracted from the color markers

3. treat the information from the Particle Filter as hints; then use them to make a better segmentation of the color markers in the images

- May have a chance to try a wearable eye-tracker

- In terms of the Master Thesis:

1. Ask for a thesis from a former student here as a reference

2. Start to think about which experiments are going to be shown in this thesis

Tuesday, 15 July 2014

Estimate Homography to do 2D to 3D integration (4): Extract known points in pairs automatically

I. Preliminary results in using Particle Filter to locate color markers automatically:

- including zoom, tilt, rotation, viewpoint_change

II. Use the automatic method in my current framework... to see how it works in the "2D to 3D" process

- Found that the process is not accurate and stable enough, for the following reasons:

1. the center of the circular color markers is not located accurately

2. the Webcam moves too fast

Monday, 14 July 2014

Estimate Homography to do 2D to 3D integration (3): Extract known points in pairs automatically

I. Discuss with experienced people to ask for solutions on how to locate my color markers automatically.

II. One of the hints:

- particle filter + color

- particle filter tutorial

III. Read the code and documents, then make some modifications to fit my code and needs.
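To make the "particle filter + color" hint concrete, here is a minimal sketch of one predict / weight / resample step for tracking a colored marker. It is not the referenced code: the random-walk motion model, the Gaussian color likelihood, and all names and parameter values (`sigma`, `motion_std`, the synthetic 40x40 image) are assumptions chosen for illustration.

```python
import math
import random

def color_likelihood(pixel, target, sigma=40.0):
    """Weight that is high when the pixel color is close to the target color."""
    d2 = sum((a - b) ** 2 for a, b in zip(pixel, target))
    return math.exp(-d2 / (2.0 * sigma ** 2))

def particle_filter_step(particles, image, target, rng, motion_std=3.0):
    """One predict / weight / resample step; returns (new_particles, estimate)."""
    height, width = len(image), len(image[0])
    # Predict: random-walk motion, clamped to the image bounds.
    moved = []
    for x, y in particles:
        nx = min(max(x + rng.gauss(0.0, motion_std), 0.0), width - 1.0)
        ny = min(max(y + rng.gauss(0.0, motion_std), 0.0), height - 1.0)
        moved.append((nx, ny))
    # Weight: color similarity at each particle's pixel (small floor avoids all-zero weights).
    weights = [color_likelihood(image[int(round(y))][int(round(x))], target) + 1e-12
               for x, y in moved]
    total = sum(weights)
    weights = [w / total for w in weights]
    # Estimate: weighted mean position of the moved particles.
    estimate = (sum(w * x for w, (x, _) in zip(weights, moved)),
                sum(w * y for w, (_, y) in zip(weights, moved)))
    # Resample: draw particles in proportion to their weights.
    resampled = rng.choices(moved, weights=weights, k=len(moved))
    return resampled, estimate

# Synthetic 40x40 image: gray background with a red 6x6 patch at x 20..25, y 10..15.
RED, GRAY = (255, 0, 0), (50, 50, 50)
image = [[RED if 20 <= x <= 25 and 10 <= y <= 15 else GRAY for x in range(40)]
         for y in range(40)]
rng = random.Random(0)
particles = [(float(x), float(y)) for x in range(0, 40, 2) for y in range(0, 40, 2)]
estimate = None
for _ in range(10):
    particles, estimate = particle_filter_step(particles, image, RED, rng)
print(estimate)
```

The estimate only says roughly where the marker is, which fits the later idea of treating the particle positions as hints for a finer segmentation rather than as the final known points.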

Saturday, 12 July 2014

Estimate Homography to do 2D to 3D integration (2): Extract known points in pairs automatically

I. Keep drafting the code to extract 4 color markers without initial points...

Thursday, 10 July 2014

Estimate Homography to do 2D to 3D integration (1): Extract known points in pairs automatically

I. Collecting data from Kinect and Webcam to verify the implemented method

II. Draft code:

- focus on the color marker cases

Wednesday, 9 July 2014

Summary of Weekly Meeting - (17)

I. Summary:

1. Report the result of the back-projection of "2D img to 3D world"

2. Need to pay more attention to making the system more automatic in choosing the known point pairs

Tuesday, 8 July 2014

Estimate Homography to do 2D to 3D integration (39): Successfully implement the Ray-Tracing method

I. Debugging my proposed method of "Ray-Tracing" which is not stable in viewpoint_change case

II. Making video records of the results of "2D Img-to-3D world"

Monday, 7 July 2014

Sunday, 6 July 2014

Estimate Homography to do 2D to 3D integration (37): Reading - Ray Tracing & 3D Coordinate from 2D calibrated camera

I. Debugging the Ray-Tracing codes...

II. Slightly alter the strategy for finding the hitting position in the 3D_World_Coord

Friday, 4 July 2014

Estimate Homography to do 2D to 3D integration (36): Reading - Ray Tracing & 3D Coordinate from 2D calibrated camera

I. Modified code:

- Set up a range for searching, instead of finding the upper_bound and lower_bound of fZ in the World-Coord-Sys, using a method like a "Bag-of-words strategy"

- Trying to decrease the complexity of finding the corresponding fZ in the Webcam-Coord-Sys

- Trying to think of a better way to compute the similarity in order to find the most similar 3D point in the World-Coord-Sys
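One generic way to search a depth range without testing every value is a coarse-to-fine scan along the ray. The sketch below is that swapped-in technique, not the "Bag-of-words"-like strategy from the notes: it samples candidate depths, scores each by the distance to the nearest cloud point, and then narrows the range around the best candidate. It assumes the point cloud is already expressed in the same coordinate system as the ray; `search_depth`, `nearest_distance`, and all parameter values are hypothetical.

```python
import math

def nearest_distance(point, cloud):
    """Distance from a 3D point to its nearest neighbour in the cloud."""
    return min(math.dist(point, q) for q in cloud)

def search_depth(ray, cloud, z_min, z_max, steps=20, rounds=3):
    """Coarse-to-fine search for the depth at which the ray passes closest to the cloud."""
    best_z = z_min
    for _ in range(rounds):
        step = (z_max - z_min) / steps
        candidates = [z_min + i * step for i in range(steps + 1)]
        best_z = min(candidates,
                     key=lambda z: nearest_distance(tuple(z * c for c in ray), cloud))
        # Narrow the range to one step on either side of the best candidate.
        z_min, z_max = max(best_z - step, 0.0), best_z + step
    return best_z

# Synthetic cloud: a flat surface at depth 2.0, sampled every 0.1 units.
cloud = [(0.1 * i, 0.1 * j, 2.0) for i in range(-10, 11) for j in range(-10, 11)]
ray = (0.1, -0.05, 1.0)  # direction with z = 1, so the scale factor equals the depth
depth = search_depth(ray, cloud, z_min=0.5, z_max=4.0)
print(round(depth, 3))
```

The nearest-neighbour distance used here is only the simplest possible similarity measure; the brute-force `min` over the cloud is also the obvious place to reduce complexity, for example with a spatial index.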

Thursday, 3 July 2014

Estimate Homography to do 2D to 3D integration (35): Reading - Ray Tracing & 3D Coordinate from 2D calibrated camera

I. Draft code of 3D coordinate from 2D calibrated camera:

One reference for the pseudo-code: http://en.wikipedia.org/wiki/3D_pose_estimation

Wednesday, 2 July 2014

Summary of Weekly Meeting - (16)

I. Expected work and results before next Meeting:

- Accomplish the "2D-ImgPlane-Coordinate backTo 3D-world-Coordinate" process (...based on the "Ray_Tracing" technique)

- Show the known points as well as the testing points at the corresponding positions in the Kinect 2D image (...i.e., 3D-world-coordinate to 2D-projection-coordinate related to Kinect)

- Do the "Feature extraction" process, and use these features to substitute the current points, which are chosen manually (...i.e., the crosses located on a chessboard)

II. Draft codes:

- Output the projection coordinates and world coordinates of each pixel from OpenNI2 (...C++ part)

- Save the Kinect outputs, including the projection coordinates & world coordinates of each pixel, to a mat-file.

- Automatically find the position in the world coordinate that corresponds to a known 2D point.

- Check the part of "Transform from 3D-world -> 3D-Webcam"

- Check the part of "3D-Webcam-picture -> Transform from 3D-world"

- Check the part of "2D-Webcam-picture -> 3D-Webcam"

* "Xc_inv_show" is the raw result after the transformation, but it is only a "unit" ray (z = 1), so it needs to be scaled by "z_var"

* "z_var" is a scaling factor which determines where the hitting position will be (first point = (0.12235, -0.066628, 1))

- Make a draft of "Ray_Tracing" and think about how to check every "Z-coordinate" smartly without searching exhaustively.
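The relation between the "unit" result and "z_var" can be sketched with a standard pinhole model: a pixel is back-projected to a direction with z = 1, and multiplying that direction by a depth gives a 3D point in the Webcam coordinate system. The intrinsic values (`fx`, `fy`, `cx`, `cy`) and function names below are hypothetical, lens distortion is ignored, and only the ray (0.12235, -0.066628, 1) comes from the notes above.

```python
def pixel_to_unit_ray(u, v, fx, fy, cx, cy):
    """Back-project pixel (u, v) to a camera-frame direction with z = 1."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)

def point_on_ray(ray, z_var):
    """Scale the z = 1 ray by the depth z_var to get a 3D point in the camera frame."""
    return tuple(z_var * c for c in ray)

# Hypothetical intrinsics for a 640x480 image.
ray = pixel_to_unit_ray(420.0, 200.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(ray)

# The first point from the notes, placed at an assumed depth of 2.0.
first_ray = (0.12235, -0.066628, 1.0)
print(point_on_ray(first_ray, 2.0))
```

Every depth gives a different candidate point on the same ray, which is why the ray-tracing step still has to decide which "Z-coordinate" actually hits the scene.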

Tuesday, 1 July 2014

Estimate Homography to do 2D to 3D integration (34): Reading - Ray Tracing & 3D Coordinate from 2D calibrated camera

I. Studying the topic:

[Ray Tracing]

- http://www.ics.uci.edu/~gopi/CS211B/RayTracing%20tutorial.pdf

- Tutorial

[3D Coordinate from 2D calibrated camera]

- CALIBRATION OF A 2D-3D – CAMERA SYSTEM

- Plane-based calibration of a projector-camera system

- http://en.wikipedia.org/wiki/3D_pose_estimation

- Iterative_Closest_Point

[Calibration Q&A]

- http://imagelab.ing.unimore.it/visor_test/faq_calibration.txt

- Geometric Camera Parameters

- Camera Calibration

II. Draft code for preliminary test:

- Use the "Extrinsic Matrix" output by EPnP to test the inverse transformation (...Webcam-Coordinate 3D points back to the corresponding World-Coordinate 3D points). The result shows that the position computed with the Extrinsic Matrix of EPnP is correct.

- So, going from the 3D Webcam-Coordinate to the corresponding 3D World-Coordinate is no problem.

- Next, check the inverse transformation from the 2D Webcam-Img-Coordinate to the corresponding 3D Webcam-Coordinate
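The inverse-transformation test can be sketched as a round trip. Assuming the extrinsic matrix has the usual form [R | t] with Xc = R * Xw + t, the inverse is Xw = R^T * (Xc - t), because a rotation matrix's inverse is its transpose. The rotation, translation, and test point below are made-up values, not the EPnP output from this experiment.

```python
def world_to_camera(R, t, Xw):
    """Xc = R * Xw + t for a 3x3 rotation R (nested lists) and translation t."""
    return tuple(sum(R[i][j] * Xw[j] for j in range(3)) + t[i] for i in range(3))

def camera_to_world(R, t, Xc):
    """Inverse transform: Xw = R^T * (Xc - t)."""
    d = [Xc[i] - t[i] for i in range(3)]
    return tuple(sum(R[j][i] * d[j] for j in range(3)) for i in range(3))

# Hypothetical extrinsics: 90 degree rotation about the z axis plus a translation.
R = [[0.0, -1.0, 0.0],
     [1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0]]
t = (0.1, 0.2, 1.5)

Xw = (1.0, 2.0, 3.0)
Xc = world_to_camera(R, t, Xw)
Xw_back = camera_to_world(R, t, Xc)
print(Xc, Xw_back)
```

If the round trip does not return the original world point, the usual suspects are a swapped convention (camera-to-world instead of world-to-camera) or an R that is not a proper rotation.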
