I. Finish:

- Chapter 3: Proposed method

- around 8400 words, 61 pages

II. Start writing:

- Chapter 4: Experimental results

- Have a plan and structure for writing this chapter

Thursday, 21 August 2014

Tuesday, 19 August 2014

Draft Master Thesis (7) & (8)

* This memo covers 18/08/2014 to today.

I. The writing completed so far is as follows:

- Around 60% of Chapter 3 (Proposed Method) is done.

- Modifying and adding more paragraphs in Chapter 2 (Introduction and literature review)

- Inserting relevant pictures in Chapter 2 and Chapter 3

- Collecting pictures to help illustrate all ideas in Chapter 2 and Chapter 3

- 38 pages, 5077 words in total now


II. Planning how to organize Chapter 4 (Experimental Results) and Chapter 5 (Discussion)

Sunday, 17 August 2014

Estimate Homography to do 2D to 3D integration (21): Extract known points in pairs automatically

I. Generating another set of experimental results for zoom, tilt, viewpoint_change and rotation using the webcam:

- These results serve as a demonstration to illustrate the following 4 points:

1. How we build and validate our proposed system before adopting the eye-tracker

2. Why we try to use BW segmentation to improve the result of Particle Filtering in the next step

3. Why we would like to increase the size of the color markers

4. What differences and issues arise when we choose the eye-tracker instead of the webcam

- The videos, graphs, precision, and accuracy can be downloaded via: https://db.tt/kSwzkZ6e

II. Debugging and editing my code for "3D Rendering":

- Dealing with the problem that my proposed method cannot show fixations in World Coordinates without overlap across frames while the system runs automatically

- Changing the color and type of the markers to make them clearer

- The updated videos and graphs can be downloaded via: https://db.tt/eUmPKGKH

(... the original results can be found via: https://db.tt/2sRENfGC)


Friday, 15 August 2014

Estimate Homography to do 2D to 3D integration (20): Extract known points in pairs automatically

I. The results of 3D rendering:

* I haven't yet provided a function that lets users play around with these 2 demos. Currently, users can only watch the demos after I collect the extracted frames and combine them into video streams.

* The viewing angles are set by me... each demo uses 3 viewing angles

* Each video shows the view from the Kinect camera in the World Coordinate System, and also shows the 4 fixations using a "yellow cross (ground truth)" and a "red cross (the result of our proposed method)"

* Here is the download link for clearer demos... showing those 8 crosses: 3D rendering videos

- Demo 1:

- Demo 2:


Draft Master Thesis (6)

I. Focus on writing the "Implementation" part, which will cover:

- Introducing all components of our proposed system

- Describing the way to implement

- Collecting support images and graphs

- Gathering all materials used in my implementation while I work on it

- Surveying related references, websites, and toolboxes

II. Use some time to write a bit of the "Results" part:


Thursday, 14 August 2014

Estimate Homography to do 2D to 3D integration (19): Extract known points in pairs automatically

I. Studying how to make a 3D rendering:

- Coding

- Finding a suitable source as a demonstration

II. Modifying the graphs of (x, y, t), (X, Y, Z):

- (x, y, t)

- (X, Y, Z)


Wednesday, 13 August 2014

Summary of Weekly Meeting - (20)

I. Make a demo of 3D rendering:

- This demo should look like this: https://www.youtube.com/watch?v=r26fmr0UpBs

II. Possible additional experiments to expand the content of the "experimental results" chapter:

1. Comparing the results of the proposed system when fixation points are located automatically versus marked manually

2. Discussing the strengths and weaknesses

3. Doing some validation of each component... (minor)

III. Need to organize the results of precision, accuracy, graphs and extracted frames well:

- Show titles and units in the graphs of (x,y,t) and (x,y,z)

- Make a clear table to present the precision and accuracy statistics


Tuesday, 12 August 2014

Draft Master Thesis (5)

I. Finish three chapters:

- Acknowledgement

- Introduction

- Literature review (90%)

II. Other parts also have content:

- Bibliography

- Figures related to the whole thesis

- Titles of each chapter


Monday, 11 August 2014

Draft Master Thesis (4)

I. Make preliminary decisions on...:

- How many chapters to write?

- What are these chapters?

- Make a plan for the additional experiments to show in the thesis (... except precision, accuracy, and extracted frames)


Friday, 8 August 2014

Draft Master Thesis (3)

I. Collecting experimental results

II. Writing the part on the components of our proposed method


Estimate Homography to do 2D to 3D integration (18): Extract known points in pairs automatically

I. Writing code to draw graphs of (x, y, t) and (X, Y, Z)

* Only the results of the red color marker are shown here

* For each video type, the 1st image shows (x, y, t) and the 2nd image shows (X, Y, Z)

- x, y: coordinates in 2D (... the position in a Kinect frame)

- t: frame number

- X, Y, Z: coordinates in 3D (... the position located in the World)

- Zoom:

- Viewpoint_change:

- Tilt:

- Real case 1:

- Real case 2:


Thursday, 7 August 2014

Estimate Homography to do 2D to 3D integration (17): Extract known points in pairs automatically

I. Draft code to compute "Precision and Accuracy" for these 5 demo videos:

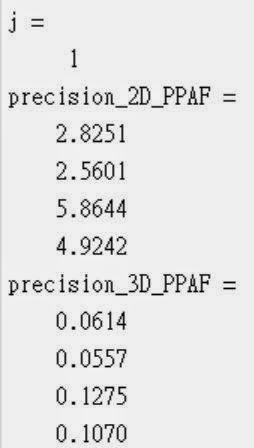

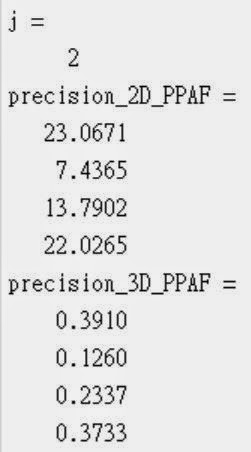

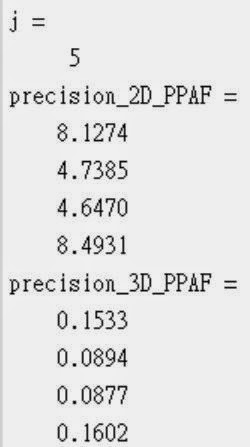

[ Precision Part ]

* There are 8 rows in the picture marked "Precision": rows 1-4 are the 2D values and rows 5-8 are the 3D values, with one row per color marker

* The order of these color markers is: yellow, red, blue, green

* Precision_2D_PPAF is the precision in 2D; Precision_3D_PPAF is the precision in 3D
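
The notes do not state how precision is computed. As a minimal sketch, assuming precision is taken as the RMS of sample-to-sample distances (one common convention in eye tracking, and not necessarily what Precision_2D_PPAF and Precision_3D_PPAF use), it could be computed like this in Python. The name `rms_s2s` is hypothetical:

```python
import math


def rms_s2s(points):
    """RMS of Euclidean distances between consecutive samples.

    `points` is a sequence of (x, y) or (X, Y, Z) tuples, one per frame.
    Assumption: precision = RMS sample-to-sample distance; the original
    code may use a different definition.
    """
    if len(points) < 2:
        raise ValueError("need at least two samples")
    squared = [math.dist(a, b) ** 2 for a, b in zip(points, points[1:])]
    return math.sqrt(sum(squared) / len(squared))
```

The same function works for 2D pixel positions and 3D world positions, since `math.dist` accepts points of any matching dimension.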

[ Accuracy Part ]

* There are 2 "ans" outputs of 4 rows each in the picture marked "Accuracy"; each row corresponds to 1 color marker

* The order of these color markers is: yellow, red, blue, green

* nframes: total number of frames in the video used for the experiments (this count includes the 1st, initial frame, but the following computation excludes the 1st frame)

* accuracy_2D contains the following columns:

- Column 1: offset <= 3 pixels in 2D, after executing the "2D-to-3D Re-projection" computation

- Column 2: offset <= 5 pixels

- Column 3: offset <= 8 pixels

- Column 4: offset <= 10 pixels

- Column 5: offset <= 15 pixels

- Column 6: offset <= 20 pixels

- Column 7: offset > 20 pixels
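
The seven columns above can be sketched as a histogram over per-frame offsets. This is a minimal Python sketch, assuming the columns are disjoint bins (each offset is counted once, in the first column whose bound it satisfies) rather than cumulative counts. `pixel_offset` and `bin_offsets` are hypothetical names, not functions from the original code:

```python
import math
from bisect import bisect_left

# Upper bounds (in pixels) of columns 1-6; column 7 collects offsets > 20.
THRESHOLDS = [3, 5, 8, 10, 15, 20]


def pixel_offset(estimated, ground_truth):
    """Euclidean distance in pixels between two 2D points."""
    return math.hypot(estimated[0] - ground_truth[0],
                      estimated[1] - ground_truth[1])


def bin_offsets(offsets):
    """Count offsets per column: <=3, <=5, <=8, <=10, <=15, <=20, >20."""
    counts = [0] * (len(THRESHOLDS) + 1)
    for d in offsets:
        # bisect_left returns the index of the first bound that is >= d,
        # or len(THRESHOLDS) when d exceeds every bound.
        counts[bisect_left(THRESHOLDS, d)] += 1
    return counts
```

If the columns are meant to be cumulative instead, a running sum over the first six counts gives that variant.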



- Result tables for Zoom, Viewpoint_change, Tilt, Real case 2 and Real case 3 (images not reproduced here); each video has two tables:

* Precision: the first 4 values of ans are in 2D, the last 4 values of ans are in 3D

* Accuracy: offsets of the 4 color markers, in columns [ <=3 pixels | <=5 pixels | <=8 pixels | <=10 pixels | <=15 pixels | <=20 pixels | >20 pixels ]

Wednesday, 6 August 2014

Estimate Homography to do 2D to 3D integration (16): Extract known points in pairs automatically

I. Extract frames from recorded videos:

- These are for the final presentation

- zoom:

- viewpoint_change:

- tilt:

- real case 1:

- real case 2:


Tuesday, 5 August 2014

Estimate Homography to do 2D to 3D integration (15): Extract known points in pairs automatically

I. Part of the "real-case" demo

II. Need to assign the possession points onto these 2 real-case videos, or manually mark these possession points via my GUI

- Info of possession points of real-case-1:

- Info of possession points of real-case-2:


Monday, 4 August 2014

Estimate Homography to do 2D to 3D integration (14): Extract known points in pairs automatically

I. Finding the limitations of my proposed system by collecting real cases for the demo:

- The brightness of the environment causes unstable color recognition by the Particle Filter method

- Not robust enough for the "look at the color markers, look elsewhere, then look at the color markers again" process: not as stable as with the webcam (... the eye-tracker shows lower color brightness than the webcam in the extracted video frames)

II. The frame rate of the webcam is not constant; instead of the webcam, adopting the eye-tracker for every experiment...

- Figuring out how to make the two videos (one from the Kinect, another from the eye-tracker) play simultaneously: the frame rate is the hint

- Kinect: 30 FPS, Eye-Tracker: 24 FPS

- Here is the way to do it:
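
The original illustration of this step is not available. One possible approach, sketched in Python under the assumptions that both recordings start at the same instant and run at constant 30 FPS and 24 FPS, is to map each Kinect frame to the eye-tracker frame closest in time. `matching_frame` is a hypothetical helper, not part of the original code:

```python
def matching_frame(kinect_idx, kinect_fps=30.0, tracker_fps=24.0):
    """Return the eye-tracker frame index closest in time to a Kinect frame.

    Assumptions: frame 0 of both videos was captured at the same instant,
    and both frame rates are constant.
    """
    if kinect_idx < 0:
        raise ValueError("frame index must be non-negative")
    # Kinect frame k is captured at time k / kinect_fps seconds; multiply by
    # tracker_fps to express that time in eye-tracker frames.
    return round(kinect_idx * tracker_fps / kinect_fps)


# Pair up the first few frames of the two videos.
pairs = [(k, matching_frame(k)) for k in range(6)]
```

With these rates, 5 Kinect frames span the same time as 4 eye-tracker frames, so Kinect frames 0 to 5 map to eye-tracker frames 0, 1, 2, 2, 3, 4.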

III. Part of experimental results for final demo

- Zoom, Tilt, Viewpoint_Change


Friday, 1 August 2014

Draft Master Thesis (2)

I. Survey relevant references:

- Particle Filter

- EPnP

- Ray Tracing

- Camera Calibration

- Similar work to mine

II. Modified previous "document of literature review"

III. Writing Thesis

